A study of interacting Bayesian recurrent neural

networks with incremental learning

__________________________________________________________________________

En

studie av interagerande Bayesianska artificiella neuronnät med gradvis

inlärning

Christopher Johansson

MSc thesis in computer science,

2D1021

Abstract

This thesis investigates the properties of

systems composed of recurrent neural networks. Systems of networks with

different time-dynamics are of special interest. The idea is to create a system

that posses a long term memory (LTM) and a working memory. The working memory

is implemented as a memory that works in a similar way to the LTM, but with

learning and forgetting at much shorter time scales. The recurrent networks are

used with an incremental, Bayesian learning rule. This learning rule is based

on Hebbian learning. In this thesis there is a thorough investigation of how to

design the connection between two neural networks with different time-dynamics.

Another field of interest is the possibility to compress the memories in the

working memory, without any major loss of functionality. In the end of the

thesis, these results are used to create a system that is aimed at modeling the

cerebral cortex.

Sammanfattning

I denna rapport kommer egenskaperna

hos system uppbyggda av återkopplade neuronnät att undersökas. System

uppbyggda av nätverk som arbetar på skilda tidsskalor är av speciellt intresse.

Målet är att skapa ett system med ett långtidsminne och ett arbetsminne.

Arbetsminnet kommer att realiseras på samma sätt som ett långtidsminne, men det

kommer att arbete med mycket kortare tidsskalor. De återkopplade neurala nätverken kommer att tränas med en

inkrementell, Bayesiansk inlärningsregel. Inlärningsregeln är baserade på

Hebbsk inlärning. I rapporten finns en noggrann undersökning av hur man kan

koppla ihop två neurala nätverk. Jag kommer också att undersöka möjligheten att

komprimera representationen i arbetsminnet, utan att det medför sämre

prestanda. I slutet av rapporten kommer jag att använda resultaten från början

av rapporten, till att skapa ett system

som efterliknar hjärnbarken.

Preface_______________________________

Acknowledgements

and general information

This master project in computer science was

preformed at SANS, Studies of Artificial Neural Systems, a department in NADA,

Numerical Analysis and Computer Science, at the Royal Institute of Technology,

KTH. The work was done during the autumn of year 2000. Professor Anders

Lansner, head of the SANS research group, was the examiner of the project.

I would like to thank Anders Sandberg for his

help and support during the project. Anders S. managed to explain all questions

I had during the work on the project. He also taught me a diverse set of new

knowledge. Anders Lansner woke my interest on the subject of research, and he

has all through the work been encouraging. I also would like thank everyone

else in the SANS group for an inspiring environment; Örjan Ekeberg , Erik

Fransén, Pål Westermark, Peter Raicevic, Anders Fagergren, Jeanette Hellgren

Kotaleski, Alexander Kozolov, Erik Aurell.

1.0 Introduction

It’s fairly clear that the brain

posses several kinds of memory processes. These can be divided into two major

categories, long-term memory (LTM) and short-term memory (STM). This thesis

will focus on how the STM can be constructed. The thesis will also be concerned

with the interaction between LTM and STM and the effects that arise in systems

that are comprised of these two sorts of memories.

Long-term memory processes have

for a long time been considered to reside in the synapses. Long-term

potentiation (LTP) and long-term depression (LTD) have been observed to occur

in synapses. LTP and LDP in the synapses are thought to constitute the

long-term memories that we posses [1]. Based on these observations, parallels between

artificial neural networks and populations of nerve cells have been made. Based

on these ideas, an artificial neural network (ANN) can be used as a memory.

This type of ANN is called attractor networks. Attractor networks have been

suggested to constitute a good model of how the LTM memory works [2-6].

It is a common view in the

research community that short-term storage of memories is based on the current

activity in the brain. In this view each short-term memory resides in the brain

as a mode of an activity-wave [7]. The mode of this activity-wave can swiftly be

changed to accommodate new memories or to forget old memories.

In this thesis, a different view

is adopted of how the short-term memory processes is attained. In the presented

view, the short-term memories are stored in the synapses between neurons. It

means that the short-term memory process is similar to the long-term memory

process, but it work on a shorter time scale. In this thesis, I have used an

attractor network with high plasticity, to simulate the STM.

The main focus of the thesis was

to establish that an attractor network with high plasticity could be used as a

model of STM, and that the STM could function as a working memory.

The most important function of a

STM is to hold information about the current situation. The STM also needs to

be able to swiftly change its associations as new information arrives. The STM

does not need to capture the details of the arriving information. The details

are captured and stored by the LTM.

This means that it’s more important for the STM to make the correct

associations to the pattern in LTM than to be able to store the complete

pattern.

An important question is how the

memories stored in the LTM most effectively can be represented in the STM. The

representation of memories in the STM does not need to be identically to the

representation of the memories in the LTM. A requirement to make this possible

is that there is a distinct connection from each memory in the STM to the

corresponding memory LTM. Several different methods can be used to create the

compressed memories of the STM and associate these STM memories to their

corresponding memories in the LTM.

A central concept is working

memory. Working memory is a concept used in cognitive psychology. The term,

“working memory”, was introduced by Alan Baddely [8]. This thesis shows how the STM, based on the Bayesian

neural network, can be used as a working memory. In systems with a working

memory, short-term variable binding (STVB) can be made. STVB is some times

referred to as role filling. STVB is a basic function, needed to

construct a logical reasoning system.

The basic function of an

auto-associative memory is, as the name suggests, to associate the input to one

of the stored memories. If an input pattern is presented to the auto-associative

memory, the memory will respond with the stored memory that most closely

resembles the input. The associative memories in this thesis were implemented

with attractor networks. Attractor networks are constructed with recurrent

networks of artificial neurons. In the recurrent network, each neuron has

connections to all other neurons. Each

of these connections is equipped with a weight, which controls the influence

between the neurons. The connection weights form a matrix. The attractor network

stores the patterns by altering the values of the weights [9].

The attractor networks were

constructed with artificial neurons. The artificial neural networks were

implemented with a palimpsest,

incremental, Bayesian learning rule [10]. This Bayesian learning rule allows the user to

control the temporal properties of plasticity in the network by the

modification of a single parameter. Sandberg et al at SANS, KTH have developed

the incremental, Bayesian learning rule.

Chapter 2 contains a basic introduction to

cognitive neuroscience. There is a description of how memories are categorized

into explicit and implicit memories. The chapter also contains an overview of

the anatomy of the nervous system. Briefly the anatomy and function of nerve

cells are presented. A short

presentation of artificial neural networks is given. A closer look at attractor

networks and the Hopfield model is made. An overview of associative memories is

given, as they constitute a central concept in this thesis. Then, the

incremental, Bayesian learning rule is presented. A biological interpretation

of Bayesian learning rule is also made. Finally, some interesting concepts that

can be found in auto-associative memory systems are presented.

In chapter 3 the implementation of the Bayesian

network model is presented. The physical realization of the neural networks is

presented along with the choice of parameter values. The basic behavior of

attractor networks based on the Bayesian learning rule is presented. The

environment, in which the networks operate in, is also presented along with how

the networks were tested.

The concern of chapter 4 is to present how a

system can be made out of two networks and how these networks can be made to

cooperate. A few basic design ideas are tried and studied.

The focus of chapter 5 is how the

representation of the memories can be compressed in the STM. A couple of

different alternatives are studied. Then, interest is turned to some important

functions that can be found in a system composed of a LTM and STM.

Finally, in chapter 6, there is a demonstration

of how a working memory can be useful. STM is used as working memory in the

systems. The systems are presented with a task that requires the use of both a

LTM and STM. It is also shown how larger systems can be built with the use of

smaller modules. The modules were constructed out of a single LTM and STM.

2.0

Background

During the latter part of the 20th

century, the study of the brain moved from a peripheral position within both

the biological and psychological sciences to become an interdisciplinary field

called neuroscience that now occupies a central position within each discipline.

This realignment occurred because the biological study of the brain became

incorporated into a common framework with cell and molecular biology on the one

side and with psychology on the other. In recent years, neuroscientists and

cognitive psychologists have recognized many important distinctions of

different sorts of memory. There is a lot of speculations to how the memory is

constructed and what functions it has. Since the memory is a very integrated

system, it’s hard to test specific parts / properties of it. The mixture of two

disciplines is one of the reasons why there are so many ideas to how the memory

is constructed, and the jungle of terminology surrounding the subject.

The different memory systems

have been distinguished according to several attributes or criteria. Here are

some of the more important differences; The content or kind of information

those systems mediate and store (Episodic / Semantic / Procedural memory) and

how they store and retrieve that information (Explicit / Implicit memory).

Another distinction is the memory’s storage capacity and the duration of the

information storage (LTM / STM).

Figure 1 An illustration of how the

properties of memory can viewed to be orthogonal. The horizontal axis represents

the time span of the memories and the vertical axis could be said to represent

awareness of the memories.

LTM can be thought of as a

sturdy memory with almost unlimited capacity. The LTM is thought to reside in

the different receptive areas of the cerebral cortex [11]. A closer description of the receptive areas of the

cerebral cortex is presented in section 2.2. LTM can be seen to be composed of

two different types of memory, declarative memories and nondeclarative

memories. An example of declarative memory is the name of your mother, while

your cycling skill is a nondeclarative memory. The time scale for long-term

memory operations ranges from minutes to years. The time span of a memory depends

on a number of factors. One of the most important factors is the number of

times the memory is presented to you.

The concept of a STM has been

around for a long time. The time scale of short-term memory operations ranges

from less than a second to minutes. It’s an appealing idea that it exists some

sort of temporary memory storage where sensory impressions could temporarily be

stored before they are processed or before they become consolidated into LTM.

Several kinds of STM have been described, again mainly on the basis of

storage-time distinctions and phenomenal or neuropsychological data. The

shortest of STM would be iconic memory [12], which has the capacity to retain a visual image for

up to 1 second after presentation. Echoic memory is used to store sounds, and

has a slightly longer time span then iconic memory. Immediate memory

would last a few seconds longer. Although different STM have been proposed, I

will not deepen the discussion into the subject of different kinds of STM,

instead I will adapt a broader view of the subject.

The definition of STM that

transcends the temporal criterion is working memory. Working memory is a

concept of STM that derives from cognitive psychology [8]. Working memory is thought to be a temporary storage

used in performance of cognitive behavioural tasks, such as reading, problem

solving, and delay tasks (e.g., delayed response and delayed matching to

sample), all of which require the integration of temporally separate items of information.

Baddeley have more recently developed his view of working memory, and he now

states that it constitutes of a phonological loop, a visuospatial sketchpad and

the central executive [8].

Figure 2 A hierarchic view of the

constituents of explicit and implicit memory. Explicit memories, are memories

that you are aware of. Implicit memories are memories you possess, but are not

aware of. Explicit memories can be divided into two categories, episodic and

semantic memories. Episodic memories are whole scenarios. Semantic memories are

lexical memories i.e. words. A form of implicit memory is procedural memory. As

mentioned earlier, the group of implicit memories are memories you are not

aware of i.e. the skill of cycling.

Explicit (or declarative) memory is the memory

of events and facts; it is what is commonly understood as personal memory. One

part of it contains the temporally and spatially encoded events of the

subject’s life for which reason it has alternately been called episodic

memory [13, 14]. Another part contains the knowledge of facts that

are no longer ascribable to any particular occasion in life; they are facts

that, through single or repeated encounters, the subject has come to categorize

as concepts, abstractions, and evidence of reality, without necessarily

remembering when or where he or she acquired it. This is what Tulving has

called semantic memory [14].

Implicit (or nondeclarative)

memory, the counterpart of declarative memory, is a somewhat difficult concept

to grasp. It can be viewed as the memory for development of motor skills

although it encompasses a wide variety of skills and mental operations. Cohen

and Squire called this type of memory procedural memory [15]. Implicit memory can also be viewed as the influence

of recent experiences on behaviour, even though the recent experiences are not

explicitly remembered. For example, if you have been reading the newspaper

while ignoring a television talk show, you may not explicitly remember any of

the words that they used in the talk show. But in a later discussion, you will

more likely use the words that they used in the talk show. Psychologists call this phenomenon priming,

because hearing certain words “primes” you to use them yourself.

The nervous system consists of

the central nervous system and the peripheral nervous system. The central

nervous system (CSN) is the spinal cord and the brain, which in turn include a

great many substructures. The peripheral nervous system (PNS) has two

divisions: the somatic nervous system, which consists of the nerves that convey

messages from the sense organs to the CNS and from the CNS to the muscles and

glands, and the autonomic nervous system, a set of neurons that control the

heart, the intestines and other organs.

The brain is the major component

of the nervous system and it is a complex piece of “hardware”. Weighing

approximately 1.4 kilogram in an adult human, it consists of more than 1010

neurons and approximately 6*1013 connections between these neurons [16]. The struggle to understand the brain has been made

easier because of the pioneering work of Ramón y Cajál [17], who introduced the idea of neurons as structural

constituents of the brain. I will now make some comparisons that are far from

valid but although quite illustrative. Typically, neurons are five to six

orders of magnitude slower than silicon logic gates; events in a silicon chip

happen in the nanosecond (10-9 s) range, whereas neural events

happen in the millisecond (10-3 s) range. However, the brain makes

up for the relatively slow rate of operation of a neuron by having a truly staggering

number of neurons with massive interconnections between them. Although the

brain constitutes an incredibly large number of neurons, it’s still very energy

efficient. The brain use approximately 10-16 joules per operation

per second, whereas the corresponding value for the computers in use today is

about 10-6 joules per operation per second [18]. If one makes the assumption that the brain consumes

400 kg-calories/24h, the brain has an effect of 20 watts, which is equal to a

modern processor.

What sets neurons apart from

other cells are their shape and their ability to convey electrical signals. The

anatomy of a neuron can be divided into three major components: the soma (cell

body), dendrites and an axon. The soma

contains a nucleus, mitochondria, ribosomes and the other structures typical of

animal cells. Neurons come in a wide variety of shapes and sizes in different

parts of the brain. The pyramidal cell is one of the most common types of

cortical neurons. The typical pyramidal cell can receive more than 10,000

synaptic contacts, and it can project onto thousands of target cells. Axons are

the transmission lines from the soma to the synapse, and dendrites are the

transmission lines from the synapses to the soma. These two types of cell

filaments are often distinguished on a morphological ground. An axon often has

few branches and greater length, whereas a dendrite has more branches and

shorter length. There are some exceptions to this view. Some dendrites contain

dendritic spines where specialized axons can attach [19].

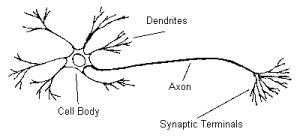

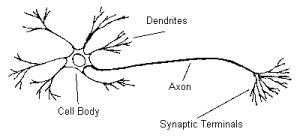

Figure 3 A pyramidal

cell, which is the most common type of nerve cell in the cerebral cortex. The

pyramidal cell is here depicted with only its most important filaments, the

dendrites, the axon with its synaptic terminals, and the cell body. [Figure 3]

Synapses are elementary

structural and functional units that mediate the interactions between neurons.

The most common kind of synapse is a chemical synapse. A presynaptic process

liberates a transmitter substance that diffuses across the synaptic junction

between neurons and then acts on a postsynaptic process. Thus a synapse

converts a presynaptic electrical signal into a chemical signal and then back

again into a postsynaptic electrical signal. It is assumed that a synapse is a

simple connection that can impose excitation or inhibition, but not both on the

receptive neuron. It is established that the synapses can store information

about how easily signals should be able to pass through. The process that

account for this ability is LTP (Long Term Potentiation). In the case of

inhibitory synapses there is a similar process called LTD (Long Term

Depression) [1].

The majority of neurons encode

their outputs as a series of brief voltage pulses. These pulses, commonly known

as actionpotentials or spikes, originate at or close to the soma (cell body) of

neurons and then propagate across the individual neurons at constant velocity

and amplitude. The reasons for the use of actionpotentials for communication

among neurons are based on the physics of axons. The transportation of the

actionpotentials is an active process. The axon is equipped with ion-pumps,

that actively transport K+, Na2+, Cl-, ions in

and out through the axon’s cell membrane. The active transportation of

actionpotentials is necessary when the axons span great distances otherwise the

actionpotentials would be too reduced. If the actionpotentials are too reduced

when they reach the end of the synapses, they are not able to initiate the

release of transmitter substances. The myelin or fat that surrounds the axons

lessen the reduction of the actionpotentials.

The surface of the forebrain

consists of two cerebral hemispheres, one on the left side and one on the

right, that surround all the other forebrain structures. Each hemisphere is

organized to receive sensory information, mostly from the contralateral side of

the body, and to control muscles, mostly on the contralateral side, through

axons to the spinal cord and cranial nerve nuclei. The cellular layers on the

outer surface of the cerebral hemispheres form grey matter known as the

cerebral cortex. Large numbers of axons extend inward from the cortex, forming

the white matter of the cerebral hemispheres. Neurons in each hemisphere

communicate with neurons in the corresponding part of the other hemisphere

through the coreus callosum, a large bundle of axons.

Figure 4 The cerebral cortex of a human

brain. In the picture the cortex has been divided into it’s major functional

areas. There are descriptions of the cortex, where the cortex is divided into

50 functional areas or more. [Figure 4]

The cerebral cortex has a very

versatile functionality. At a glance, the cortex seems to be structurally very

uniform. This suggests that the functionality of the cortex is very general,

but we know that different areas of the cortex handle specific tasks. This is

supported by the fact that microscopic structure of the cells of the cerebral

cortex varies substantially from one cortical area to another. The differences

in appearance relate to differences in the connections, and hence the function.

Much research has been directed toward understanding the relationship between

structure and function. The sensory and motor cortical areas have been found to

have a hierarchy order. In the case of the sensory cortical areas, there are

lots of connections from the higher order sensory areas to the prefrontal

cortex. In the case of the motor cortical areas, there are lots of connections

leading from the prefrontal cortical area to the higher order motor cortical

areas [20].

Figure 5 The cerebral cortex is divided

into to six layers. Layer 2&3 is often considered as a single layer. There

is also a thought of a division of the cortex into columns vertical to the

layers of cortex. The existence of these vertical modules is highly debated.

[Figure 5]

In humans and most other

mammals, the cerebral cortex contains up to six distinct laminas, layers of

cell bodies that are parallel to the surface of the cortex. Layers 2 and 3 are

usually seen as one layer. Most of the incoming signals arrive in layer 4. The

neurons in layer 4 sends most of their output up to layers 2 & 3. Outgoing signals

leaves from layers 5 and 6. In the sensory cortical areas the cells or neurons

with similar interests tend to be vertically arrayed in the cortex, forming

cylinders known as cortical columns. The small structures, called mini-columns,

are about 30 mm

in diameter. These columns are summed

up into larger structures called hypercolumns that are about 0.4 -1.0 mm. In

the artificial neural network used to run the simulations described later on,

there will be a similar concept to the hypercolumns. Outside the sensory areas

the structures of the columns are less distinct. Each column in a hypercolumn

can be seen to perform a small and specific piece of the work that is preformed

by a hypercolumn. Within a hypercolumn the communication between the columns constituting

the hypercolumn, is very intensive [21].

Neural networks are very

interesting, because they work in a completely different way than a

conventional digital computer does. Neural networks process information, using

a vast number of non-linear computational units. This means that the

computations are done in a non-linear and highly parallel manner. A

conventional computer, based on the von Neumann machine, often only uses one

computational unit, hence process the information in a sequential manner. It is

often said that neural networks are superior to the standard von Neumann

machines. This isn’t true, but it’s a fact that neural networks and von Neumann

machines are good at different forms of computations [22].

The neural networks used in this

thesis were implemented on regular desktop computers. This is usually the case,

since it’s much easier to construct an implementation in software than in

hardware. A hardware implementation of a neural network is more resource

efficient.

An associative memory is a

memory that stores its inputs without labelling them (memories aren’t given an

address). To recall a memory you need to present the associative memory with an

input similar to the memory you want to retrieve. There are two types of

associative memories, auto-associative memories (which are sometimes

also referred to as content addressable memories) and hetro-associative

memories. When fragmented pattern is presented to an auto-associative

memory, the memory tries to complete the pattern. If a fragmented pattern is

presented to a hetro-associative memory, the memory tries to associate the

presented pattern to another pattern. Note that all the associations are

learned in advance [23].

The basic idea behind an

auto-associative memory is very simple. Each memory is represented by a

pattern. A pattern is a vector containing N binary values corresponding to the

states of the N neurons. When an auto-associative memory has been trained with

a set of P patterns { xm } and then presented with a new pattern xP+1,

the auto-associative memory will respond with producing whichever one of the

stored patterns most closely resembles xP+1. This could of course be

done with a conventional computer program that computes the Hamming distance

between pattern xP+1 and each of the P stored patterns. The Hamming

distance between two binary vectors is the number of bits that are different in

the two vectors. But if the patterns are large, and very many (these two

attributes usually come together), the auto-associative memory with its highly

parallel structure will be immensely faster then the conventional computer

program. An example of application is image recognition. Imagine that you

receive a very noisy image of your house, if this image previously has been

stored in the auto-associative memory, the memory will produce a reconstruction

of the image.

Associative memories have more

nice features then just noise removal. Associative memories have the very

import ability to generalize. This makes it possible for associative memories

to handle situations where they are presented with memories never before

encountered. Another side of generalization is categorization of memories. This

is also a feature handled by associative memories. Categorization means that

similar memories are stored as one memory. The common features of the memories,

stored in the same category, are stored robustly and are easy to retrieve. The

individual details of each memory in the category leave only a minor trace in

the memory. When a memory is retrieved from the category, it is very likely to

posses the details of the most recently stored memory.

The workings of the associative

memory are usually explained by an energy abstraction. In this abstraction, the

memories stored in the associative memory, constructs an energy landscape. The

energy landscape has as many dimension as the stored memories have attributes.

This often means that the energy landscape has a high number of dimensions. The

energy landscape in figure 6 only has two dimensions and thus the memories

stored, in the corresponding associative memory, only have two attributes. Each

learned memory creates a local minimum in the energy landscape. These local

minimums are called attractors. In this concept, the input to the associative

memory is a position in the energy landscape, where information is stored as a

basin in the energy landscape. The retrieval of a memory can be seen as a

search for a local minimum in the energy landscape. The starting point for this

search is the input, which is similar to the memory that is going to be

retrieved. Although this view can be quite elusive, since we are talking about

a high dimensional space, it nonetheless gives an illustrative view of the way

an associative memory works.

Figure 6 An illustration of the energy

landscape that is produced by the associative memory. The basins, the lowest

points in this energy landscape, are called attractors. The attractors could be

said to constitute the memories in an associative memory. These types of

networks are referred to as attractor networks.

There are several ways an

associative memory can be constructed. The most common method is to use the

Hopfield model to construct an associative memory. In this thesis I will use an

advanced version of the Hopfield model, based on the laws of probabilities, to

construct associative memories.

The idea behind the Hopfield

model is largely based on Donald Hebb’s well known work: Assume that we have a

set of neurons, which are connected to each other through connection weights

(representing synapses) [24]. In the discrete Hopfield model, the neurons can

either be active or non-active. When the neurons are stimulated with a pattern

of activity, correlated activity causes connection weights between them to

grow, strengthening their connections. This makes it easier for neurons that in

the past have been associated to activate each other. If the network is trained

with a pattern, and then presented with a partial pattern that fits the learned

pattern it will stimulate the remaining neurons of the pattern to become

active, completing it. If two neurons are anti-correlated (one neuron is active

while the other neuron is not) the connection weights between them are weakened

or become inhibitory. This form of learning is called Hebbian learning, and is

one of the most used non-supervised forms of learning in neural networks.

The Hopfield network consists of

a set of neurons and a corresponding set of unit delays, forming a

multiple-loop feedback system [9]. If N is the number of neurons in the network, the

number of feedback loops is equal to N2-N. The ”–N” expression

represents the exclusion of self-feedback. Basically, the output of each neuron

is fed back, via a unit delay element, to each of the other neurons in the

network. Note that the neurons don’t have self-feedback. The reason to this is

that self-feedback would create a static network, which in turn means a

non-functioning memory.

Each feedback-loop in the

Hopfield network is associated with a weight, wij. Since we had N2-N

feedback loops, we will have N2-N weights. Imagine that we have P

patterns, where each pattern, xm, is a vector containing the values 1 or –1. Then

a weight matrix can be constructed in the following manner:

where m is index within a set of

patterns, P is the number of patterns, and N is the number of units in a

pattern (N is the size of the vectors in the set { xm

}). The patterns represent the activation of the neurons. The neurons can be in

the states oi Î ±1.

To recall a pattern (of

activation), oi, in this network we can use the following update

rule:

If the underlying network is

recurrent the process of recollection is iterative. This iterative process,

where the instable and noisy memory becomes stable and clear, is called relaxation.

Since the network will have a

symmetric weight matrix, wij, its possible to define a energy

function called Lyapunov function [25]. The Lyapunov function is a finite-valued function

that always decreases as the network changes states during relaxation.

According to Lyapunov’s Theorem 1, the function will have a minimum somewhere

in the energy landscape, which means the dynamics must end up in an attractor.

The Lyapunov function is for a pattern x defined by:

The Hopfield model constitutes a

very simple and appealing way to create an associative memory. The model have a

problem called catastrophic forgetting.

Catastrophic forgetting occurs when the Hopfield network is loaded with

too many patterns. It can be said to occur when there are to many basins in the

energy landscape. If the network is loaded with to many patterns, errors in the

recalled patterns will be very severe. The storage capacity of the Hopfield

network is approximately 0.14N patterns, where N is the numbers of neurons in

the network [25].

The Hopfield model can also be

made continuous. The model is then described by a system of non-linear first-order

differential equations. These equations represent a trajectory in state space,

which seeks out the minima of the energy (Lyapunov) function E and comes to an

asymptotic stop at such fixed points in analogy with discrete Hopfield model

presented.

The standard correlation based

learning rule used in the Hopfield model, suffers from catastrophic forgetting.

To cope with this situation Nadal, Toulouse and Changeaux [26] proposed a so-called marginalist-learning

paradigm where the acquisition intensity is tuned to the present level of cross

talk “noise” from other patterns. This makes the most recently learned pattern

the most stable. New patterns are stored on top of older ones, which are

gradually overwritten and become inaccessible, a so-called “palimpsest memory”.

This system retains the capacity to learn at the price of forgetfulness.

Another smoothly forgetting

learning scheme is learning with in bounds, where the synaptic weights wij are bounded –A £ wij £ A. This learning scheme

was proposed by Hopfield [9]. The learning rule for training patterns xn

is

where c is a

clipping function

The optimal capacity 0.05N is

reached for A »

0.4 [27]. For high values of A, catastrophic forgetting

occurs, for low values the network remembers only the most recent pattern. This

implies a decrease in storage capacity from 0.14N of the standard Hopfield

model. Total capacity has been sacrificed for long-term stability.

As previously discussed, there

are several approaches to creating a memory in a neural network context. This

thesis uses associative memories with, palimpsest properties and a structure

with hypercolumns based on a Bayesian attractor network with incremental

learning. This memory model is used because it is a good model of the

structures in the cerebral cortex and at the same time comparably simple. The

model also makes sense from a statistical viewpoint.

The artificial neural network

with hypercolumns and incremental, Bayesian learning developed by Sandberg, et

al. [10] is a development of the original Bayesian artificial

neural network model developed by Lansner et al. [28-30] , which was developed to be used with one-layer

recurrent networks.

The Bayesian learning method is

a learning rule intended for units that sum their inputs multiplied by weights

and use that sum to determine, by using a non-linear function, their output

(activation). This is much like many other algorithms for artificial neural

networks. The weights in a Bayesian network are set in accordance with rules

derived from Bayes’ expressions concerning conditional probabilities. This

means that the unit activation can be equated with the confidence in various

features. The rule is local, i.e. it only uses data readily available at either

end of a connection. The algorithm allows, in an easy way, to adjust the time

span over which statistical data is collected. The time span is adjusted by a

single variable, often called a.

Regulating the value of a

, and hence the time span for collecting statistical data, the plasticity of

the network is regulated.

The Bayesian learning rule can

be extended to handle continuous valued attributes. This have been done by

Holst and Lansner [30], using an extended network capable of handling graded

inputs, i.e. probability distributions given as input, and mixture models.

To deal with correlations

between units that cause biases in the posterior probability estimates,

hypercolumns were introduced [29]. A hypercolumn, named in analogy with cortical

hypercolumns [31], is a module of units that represent all possible

combinations of values of some primary features and hence provide a

anti-correlated representation of the network input.

Figure 7 Here is a small recurrent

neural network with the 6 neurons divided into three hypercolumns. Note that

there are no recurrent connections within each hypercolumn. With some

imagination it can also be seen how the weights wij form a

matrix.

I am now going to present a

continuous, incremental Bayesian learning rule with palimpsest memory

properties. The forgetfulness can

conveniently be regulated by the time constant of the running averages. This

implies that we easily can construct a STM or LTM memory with this learning

rule.

Bayesian Confidence Propagation

Neural Networks (BCPNN) are based on Hebbian learning and derived from Bayes

theorem for conditional probabilities:

where m is an attribute value of

certain class x. The purpose of calculating the probabilities of the observed

attributes for each class is to make as few classification errors as possible.

The reason we want to use Bayes theorem is that it is often impossible to make

a good estimate of P(x|m) directly out of the training data set. On the other

hand, a good estimate of P(m|x) is often possible to achieve. Next we will see

how this can be implemented in a neural network context.

The input to the network is a

binary vector, x. The vector x is composed of the smaller vectors

x1, x2,…xN. Each of these sections x1,

x2,…xN are representing the input to a hypercolumn. This

means that the input space, which represents all possible inputs to the

network, can be written as X = X1, X2,…XN

Each variable Xi can

take on a set of Mi different values. This means xi will

be composed of Mi binary component attributes xii’ (the

i’ th possible state of the i th attribute of xi) with a normalised

total probability

From the input, x, we

want to estimate the probability of a class or set of attributes y. (The class y is the output of

the network and the input, x, is seen as an attribute.) The vector y

has the same structure as the vector x. If

we condition on X (where unknown attributes retain their prior distributions)

and assume the attributes xi, to be both independent, P(x) = P(x1)P(x2)…P(xN), and

conditionally independent, P(x|y) = P(x1| y)P(x2| y)…P(xN| y),

we get:

where oii’

= P(xii’|Xi).

Since y can just be regarded as another random variable it can be

included among the attributes xi and there is no reason to

distinguish the case of calculating yjj’ from calculating xii’.

If X represents known or estimated information, we want to create a

neural network which calculates P(y),

from the given information. If we take the logarithm of the above formula we

get

(1)

(1)

Now, let the input X(t) to the network be viewed as a

stochastic process X(t,×) in

continuous time. Let Xii’(t) be component ii’ of X(t), the observed input. Then we can

define Pii’(t)=P{X ii’(t)=1} and P ii’jj’(t)=P{X

ii’(t)=1, X jj’(t)=1}. Equation (1) becomes:

(2)

(2)

Given the information

{X(t’),t’<t} we now want to estimate Pii’(t) and P ii’jj’(t).

This can be done by using current unit activity oii’(n) at time n with the following two estimators

where t

is a suitable time constant.

(3)

(3)

(4)

(4)

The estimator in equation (3)

estimates the probability of a single neuron to become active per time unit.

The estimator in equation (4) estimates the probability for two neurons to

simultaneously be active. L is the estimated probability per time unit or rate

estimated probability. This means that L is estimated from a

subset of the events that has occurred, whereas P is estimated from all events

that has occurred. The rate estimator explains the palimpsest property of the

learning rule.

These estimates can be combined

into a connection weight, which is updated over time. The bias can also easily

be stated:

(5)

(5)

(6)

(6)

The base for the logarithms is

irrelevant, but for performance reasons the natural logarithm is often the best

choice. Logarithms with other bases are often derived from the computation of

the natural logarithm.

The usual

equation for neural network activation is

(7)

(7)

where hj is the

support value of unit j, bj is its bias, wij the weight from

i to j and f(hi) the output of unit i calculated using the transfer

function f. The output f(hi) equals oii’ in equation (1)

and (2). In the basic Hopfield model the activation function, f(), is, as we

earlier saw, a step function.

The form in equation (2) is

slightly more involved then (7), and has to be implemented as a pi-s

neural network or approximated [29,

30]. The activation equation in the learning rule is

(8)

(8)

Comparing

terms in equation (8) and (2) we make the identifications

(9)

(9)

(10)

(10)

(11)

(11)

P(xii’|x) = oii’

= f(hii’) can be identified as the output of unit ii’, the

probability that event xii’ has occurred or an inference that it has

occurred. Since inferences are uncertain, it is reasonable to allow values

between zero and one, corresponding to different levels of confidence in xii’.

Since the independence

assumption is often only approximately fulfilled and we deal with

approximations of probability, it is necessary to normalise the output within

each hypercolumn:

(12)

(12)

The network is used with an

encoding mode where the weights are set and a retrieval mode where inferences

are made. Input to the network is introduced by setting the activations of the

relevant units (representing known events or features). As the network is

updated the activation spreads, creating a posterior inferences of the

likelihood of other features.

As we discussed earlier,

networks with update rules like equation (7) and symmetric weight matrices an

energy function can be defined, and convergence to a fixed point is assured [27]. In this case this does not strictly apply, but for

activation patterns leaving only one nonzero unit in each hypercolumn, it does

apply. In practice it almost always converges, even though there is no input.

In the absence of any

information, there is risk for underflow in the calculations. Therefore we

introduce a basic low rate l0. In the absence of signals Lii’(t)

and Ljj’(t)

now converges towards l0 and Lii’jj’

towards l20,

producing wii’jj’(t) = 1 for large t (corresponding to uncoupled

units). The smallest possible weight value if the state variables are

initialised to l0

and l20

respectively is 4l20,

and the smallest possible bias log (l0). The upper bound on the weights becomes 1/l0.

This learning rule is hence a form of learning within bounds, although in practice

the magnitude of the weights rarely comes close to the bounds.

The learning rule (equations

(3)-(6)) of the preceding section can be used in an attractor network similar

to the Hopfield model by combining them with an update rule similar to

equations (8)-(12). The activities of the units can then be updated using a

relaxation scheme (for example by sequentially changing the units with the

largest discrepancies between their activity and their support from other

units). One could also use a random or synchronous updating similar to an

ordinary attractor neural network, moving it towards a more consistent state.

This latter approach is used here. The continuous time version of the update

and learning rule takes the following form (the discrete version is just a

discreteisation of the continuous version using Euler’s method):

(13)

(13)

(14)

(14)

(15)

(15)

(16)

(16)

(17)

(17)

(18)

(18)

where t0 is the time

constant of change in unit state. a = 1/t is the inverse of the learning time constant; it is a

more convenient parameter than t. By setting a temporarily to zero the network activity can change

with no corresponding weight changes, for example during retrieval mode.

The use of hypercolumns in the

model presented implies that there will be no recurrent connections within a

hypercolumn of the network. Recurrent connections within a hypercolumn are

fully anti-correlated. The self-recurrent connection is fully correlated. Thus

the weights connecting the neurons within a hypercolumn would either be set to

their minimum or their maximum value.

Each neuron in the network will

have a bias that is derived from the basic set of recurrent connections.

Connections projected from other populations of neurons will not add any bias to

the receiving neurons, although it would make sense from a mathematical point

of view to include the bias in the projection.

Auto-associative memories based

on artificial neural attractor networks, like for example early binary

associative memories and the more recent Hopfield net, have been proposed as

models for biological associative memory [9,

32]. They can be regarded as formalisations of Donald

Hebb’s original ideas of synaptic plasticity and emerging cell assemblies. In

this view each neuron in the artificial neural network is thought to equal a

single nerve cell in the biological neural network. In figure 8 is an

illustration of an artificial neuron. With some imagination it is possible to

see the similarities with a nerve cell.

Figure 8 Depicted

here is an artificial neuron, and its functions. Some parallels to a biological

neuron are inferred in the figure. Note that the output is conveyed to several

other neurons.

Each connection weight, wij,

in figure 8 can be interpreted as a synaptic connection between two neurons. In

figure 9 one of these connections is depicted in more detail.

Figure 9 This figure shows a single

synaptic connection between two neurons in our artificial neural network. The

values of Li (=Pi,

Pj), Lij (=Pij),

and wij , derived in equations (15, 16, 18), can be interpreted as

shown in figure. Pj, Pi, Pij, are values

associated with synaptic terminals, synaps, dendrites ability to convey a

signal from cell j to cell i.

Although the above presented

view of each neuron corresponding to a nerve cell is appealing, it isn’t

realistic. Real neurons aren’t as versatile as our artificial neurons i.e. a

real neuron can’t impose both inhibition and excitation, which is stated by

Dalés law [33]. A better view of the correspondence between our

artificial neurons and real neurons is to think of our artificial neurons as

corresponding to a cortical column of real neurons. In our Bayesian attractor

network we have a structure of hypercolumns, where each hypercolumn is

corresponding to a group of cortical columns.

There are a couple interesting

concepts or functions I hope to find in the simulations of the memory systems

developed in the experiments. These

concepts originate from cognitive psychology.

In a memory that’s going to be

implemented in a decision making system, there’s a need not only to be able to

recall earlier events, but also be able to recall these events with current

situation data. This type of process is usually called short-term variable

binding (STVB) or role filling. To illustrate this concept I will

give an example:

John is visiting his

grandfather Sven. After he has visited his grandfather, John meets his two

friends, Max and Sven. When Max talks about Sven with John, John knows that the

Sven, Max is talking about isn’t his grandfather.

To achieve STVB in a system, the

system will of course need a LTM, and also some sort of STM that can

accommodate the temporary bindings. One of the main focuses of this thesis will

be to investigate how STVB can be achieved.

The chunking process is a

specialisation of the memory, which allows it to more effectively remember

certain things. The chunking learning process recruits a new idea to represent

each thought, and strengthens associations in both directions between the new

chunk idea and its constituents. Thus, the inventory of ideas in the mind does

not remain constant over time, but rather increases due to chunking. The

representation of a chunk is constructed out of its constituents. An example of

chunking is how the set { 1 2 3 } is remembered. The set can be remembered as

1, 2, and 3. The chunked version of the set is remembered as the number 123.

There are two primary reasons

for chunking: First, chunking helps us to overcome the limited attention span

of thought by permitting us to represent thoughts of arbitrary complexity of

constituent structure by a single (chunk) idea. Second, chunking permits us to

have associations to and from a chunk idea that are different from the

associations to and from its constituent ideas. This is very important for

minimizing associative interference.

In this thesis I have studied

how the representation of the short-term memories could be done more effectively.

I have also studied how these, efficient short-term representations associates

to the long-term representations. Although, the work of this thesis does not

directly focus on the chunking process, I thought it was interesting to mention

the similarities between the STM & LTM interaction and chunking.

3.0 Method

Since the simulations in this

thesis are based upon the Bayesian artificial neural network model developed in

[10], I tried to use similar settings and architectures.

In all simulations, the neural networks were first trained on a set of

patterns, and then tested. This means some consideration must be taken before

the artificial neural networks of this thesis can be implemented in a real-time

system.

The LTM was implemented as a

recurrent network consisting of 100 neurons divided into 10 hypercolumns with

10 neurons in each hypercolumn. This configuration of LTM was used throughout

the thesis with no exceptions. As for the STM, there were a couple of different

implementations in respect to the number of neurons and of hypercolumns. The

two most common implementations of the STM used 100 and 30 neurons

respectively. All the networks used, had at least one set of recurrent

connections. As earlier mentioned there were no recurrent connections within

the hypercolumns of the networks, as the internal representation in a

hypercolumn is supposed to be completely anti-correlated.

Almost all systems were

constructed under the assumption that the input to the systems always passed

the LTM before it entered the STM. The output from the system was always

extracted through the LTM. When the systems are used and not only tested, all

input/output is handled by the LTM. In a real-time system, the data presented

to the STM will always be delayed. Since the simulations in this thesis were

not run in real-time, there was no need to be concerned about this delay. The

LTM exerted a disruptive influence on the STM during training. The STM exerted

a disruptive influence on the LTM during operation. In chapter 5 I investigated

these interferences.

The recurrent connection within

a network and the connections between networks are called projections. A

projection does not only represent the physical connection, the concept also

incorporates the connection-weights. Each connection, between two neurons, is

equipped with two weights that represent the correlation between the neurons in

both directions. Since the networks are auto-associative, the weights are equal

in both directions. This does not apply to the connections between two

networks, where hetro-associations may arise. In the models, a matrix

represents the projections. The bias was not included in the projections.

The input to the artificial

neural networks was vectors of binary numbers (0 and 1). These input vectors

were constructed in respect to the hypercolumn structure of the LTM. This meant

that only one out of ten neurons in a hypercolumn structure was activated and

this was always the case. So the whole input of ten hypercolumns only caused

10, out of 100, neurons to be activated. The input could therefore be

considered sparse. The sparseness of the input affect the storage

capacity of the network. If the input is to “dense”, it will affect the storage

capacity negatively. In every run a new set of patterns was generated. The

patterns were generated from a rectangular probability-density function.

In chapter 4 the input always

consists of sets with 100 patterns. In chapter 5 the input always consists of

sets with 50 patterns. Chapter 6

contain experiments with structured data. Therefore the input is sets with

different number of patterns. The number of patterns in an input set does not

affect the STM since it forgets so quickly. The LTM is affected by the size of

the input set. This is more thoroughly explained in 3.2.

As mentioned earlier, the

systems designed in this thesis were not operated in “real-time”. The systems

had a training mode, where the memories were stored in the system. Then, during

operation mode the memories were retrieved. The system design outlined in

figure 10 was the most frequently used design. In biological memory systems the

theta rhythm may control the switch between training and operation mode of the

memory network.

Figure 10 In all

simulations, the artificial neural networks were first put in a training

mode and were trained with a set of patterns. When the training phase was completed, the networks were put in operation

mode, and tested. Note that non-conducting static projections are not

depicted in the figure (training mode).

The differential equations 13,

15 and 16 have been solved with Euler’s method. The time-step was chosen to

h=0.1, and the integrations lasted for 1 unit of time. (This meant that 10

steps were taken during integration from 0 to 1.) It often took much longer

time than one time unit to train or retrieve a pattern properly. In the case of training, a strong memory of

a single pattern was achieved trough repeated presentation of the pattern. The

relaxation process that occurs during operation mode was almost never fully

completed. (Fully completed mean that no more changes would occur if the process

was extended.)

During the training mode the

equations (15) and (16) were solved for each network in the system. Equations

(17) and (18) were then used to compute the bias and the projections for the

networks. In the case of projections between two networks, the same equations

were applied with the exception of equation (17). The bias was chosen not to be

included in the projections between networks. This could of course be

discussed. A biological interpretation of this is that the whole dendritic tree

of the synapse is given the same bias value. This means that synapses close to

the soma are not given any priority. In a real neuron, synapses closer to the

soma generate a stronger signal then synapses further out in the dendritic tree

[34]. Mathematically it also makes sense to incorporate

the bias values into the projection, even though this was not done here.

The three main parameters that

controlled the network during training mode were the value of a, the

number of patterns and the time spent training each pattern.

The main interest of this thesis

was not the retrieval-performance of the networks. The main interest was to

prove that networks with different time-dynamics could be used in the same

system. However, retrieval-performance was of great importance when different

designs were investigated, to rate how good the designs were.

To initiate the retrieval of

patterns (memories) the networks were usually presented with a copy of the learned

pattern with 2 errors. (The content of two of the hypercolumns were altered.)

In the figures describing the systems, this type of input is denoted as “Input

with errors”. In some experiments of chapter 5 and 6 the networks were

presented with only a few of the hypercolumns of the learned patterns. The

hypercolumns that were not presented to the network were filled with

zeros.

The plots over single networks

were constructed from 50 runs. In the plots of several networks, each data

point was often constructed from 20 runs. The data presented in the tables were

accumulated from 100 runs of the networks.

During testing, the networks

were put in operation mode. The equation (13) and (14) were used in order to

perform the relaxation. The relaxation process was always one time unit long.

When a network had a projection from another network, equation (13) was

replaced with equation (19). Equation (19) introduces the constant gain factor g.

The value of g varied around 1. The purpose of g was to introduce an instrument

to control the influence that connected networks imposed on each other. The

direction of the projections that g applied to was denoted, i.e. gSTM®LTM (In this case the connection from the

STM to the LTM is scaled with g.)

(19)

(19)

Here wsii’jj’ denoted

the connection-weights and osjj’ the activity pattern of the sending

neurons. Ns is the number neurons in the sending network.

Successful retrieval was defined

as the fraction of patterns, which were correctly recalled after relaxation to

a tolerance of 0.85 overlap. In the normal case where the input consisted of 10

hypercolumns, a recalled pattern was only allowed to differ in one hypercolumn

from the original pattern to be classified as correct. The retrieval ratio of

the system was often plotted as a continuous line. The retrieval-ratio of the

subsystems were often also plotted, i.e. the LTM (plotted as a dotted line) and

the STM (plotted as a dash-dotted line).

In the neural network model at

hand there are several parameters that can be chosen more or less arbitrarily.

As mentioned earlier, the choice of these parameters is consistent with [10]. In this section the default values of the parameters

are listed. These default values are common among many of the simulations. When

new constants are introduced or when the default values are altered it has been

mentioned it in the text.

In all simulations, the value

0.001 is used for l0.

l0

can be seen as the background noise in the neurons. l0

also have implications on the maximum excitation that can be conveyed from one

neuron to another.

The experiments of chapter 4

were run with 100 patterns. The capacity for an optimal trained LTM with 100

neurons is about 60 patterns. The LTM in the experiments of chapter 4 had a set to a =

0.0005. This low value of a

implied that the LTM of chapter 4 could not form properly “deep” attractors

after training for 1 unit of time. These two conditions generated a situation

where the memories stored in the LTM had a small chance of correct retrieval.

Although all trained memories left some sort of trace in the LTM. Chapter 4

investigated the possibility of using a STM to extract those memory traces.

Chapter 5 & 6 contains

experiments where the systems where presented with 50 patterns and the LTM was

run with a

= 0.005. This setting of a

allowed the LTM to learn all 50 patterns.

The STM networks in this thesis

always had the value of a

set a

= 0.5. I tried to choose a

in such a way that the STM remembered the 10 most recent patterns presented to

it. This means that the STM wasn’t affected if the number of patterns presented

to it was 50 or 100. Contrary to the LTM of chapter 4, there were no memory traces

stored in the STM of the first patterns in the training set.

A1 B1 C1

A2 B2 C2

Figure 11 Three connection-weight /

projection matrices. .A1 is from a STM. B1 is from a LTM of chapter 5 & 6.

C1 is from a LTM of chapter 4. The strength of the connection-weights is

colour-coded in A1, B1 and C1 between the logarithmic values 0 and 5. The

brighter a dot is, the stronger the connection. The diagonals of the matrices

all have black squares, showing the absence of connections within a

hypercolumn. A2, B2, C2 are the

corresponding distributions of the connection-weights. The vertical line seen

in A2, B2, C2 represents the 1000 self-recurrent connection-weights that have

been deleted.

There was a difference in the

way the projections were set up in a network with a large value of a compared to how the

projections were set up in a network with a small value of a. In a net trained with a

small value of a

(LTM) I found that the distribution of the inhibitory and excitatory weights

was very even and distinct. In figure B2 and C2, one can see that the

connection-weights are either set to be inhibitory or excitatory. There are not

many connection-weights with a value between the two groups of inhibitory and

excitatory connection-weights. While in a network trained with a large value of

a

(STM), the values of the connection-weights were evenly distributed between

inhibitory and excitatory connection-weights.

When I coupled a STM to a LTM,

the STM memory had an interfering effect on the neurons in the LTM. This meant

that the LTM had a smaller probability to relax to the correct pattern. To

prohibit this impairment of the LTM, I introduced a gain constant, gSTM®LTM,

between the STM and the LTM (equation (19)). In the systems that were trained

with 100 patterns the value of gSTM®LTM

was set to 0.03 and in the systems trained with 50 patterns the gSTM®LTM

was set to 0.1. These values were derived from trial and error processes and

they seemed to give the STM a reasonable influence on the LTM.

4.0 Network

structures

Chapter 4 investigate the basic

concepts of connected networks. In the first part of this chapter, the

importance of having recurrent connections with different plasticity kept in

different networks (having a separate STM and LTM) is studied. Then, plastic

connections are studied. It is also studied how plastic connections can be

constructed and used.

The systems in the experiments

of this chapter were trained with sets of 100 patterns. The LTM were trained

with a set

to 0.0005. The LTM had a poor retrieval-ratio of about 0.3. The choice of a meant that all patterns

in the training set were remembered, but very poorly.

The information in a neural

network is stored in the projections. Two systems were studied here. The two

systems had an equal number of connections, but different number of neurons. N

is equal to 100.

The first system, a network of N

neurons had two projections with a total of 2N2-20N connections.

Figure 12 The networks A and B are connected

with one-to-one connections. The connection-weights, wi, were

usually set to a value around 10.

The second system had two

separate networks, with 2N neurons and 2N2-19N connections. The

neurons between these two networks were connected one-to-one projection. This

meant that all elements, except the diagonal elements, in the projection matrix

were set to 1.

The question was, which of these

two systems had the best design. The systems used approximately the same amount

of connections. Since memories are stored in the connections, this comparison

seemed motivated. (Chapter 5 describes how the design of the second system is

made more effective.)

Naturally, a neuron takes much

more space and uses much more resources than a connection between two neurons.

This means that if the number of neurons in a network can be minimized at the

expense of more connections in the network, it is a good thing. The system, in

this experiment, used few neurons and a moderate number of connections.

Real synapses may posses both

low and high plasticity properties. In this experiment the two projections with

different plasticity can be seen to form a single projection that have both low

and high plasticity properties.

The system was based on a LTM. A

“STM” projectionLTM®LTM with high plasticity was added to the system’s

existing “LTM” projectionLTM®LTM with low

plasticity. The high plasticity projection used a set to 0.5 and the low plasticity projection used a set to 0.0005. The bias

values were derived from the training of the low plasticity projection,

“LTM”. The projection with high

plasticity was scaled down with g = 0.03. The value of g

was chosen after evaluating the results of the experiment in section 4.2.1. The

system was trained with equation (19) instead of equation (13).

Figure 13 The operation modes of the

system. The bias values were derived from the projection with low plasticity.

The system was constructed with two projections with different plasticity. Each

of the projections, were also treated as individual networks (LTM and

STM).

The retrieval-ratio of the

system is shown as a solid line in figure 14. The systems two projections, with

high and low plasticity were used to create one separate LTM and one separate

STM. The separate retrieval-ratio of the LTM is shown as a dotted line, and the

retrieval-ratio of the STM is shown as a dash-dotted line, in figure 14. The

following text refers to this two (LTM and STM) individualised memories. These

two memories were isolated to provide a base for comparison of

performance.

The retrieval-ratio of the first

80 patterns was slightly lower for the system than for the LTM. The

retrieval-ratio of the last 20 patterns was lower for the system than for the

STM. The system seemed to provide compromise of the retrieval-ratio between the

LTM and STM. Since the high and low plasticity projections in the system were

interacting during the iterative process of relaxation there was a problem with

interference between the two projections. The STM interfered the LTM, during

retrieval of the first 80 patterns. The STM did not have enough influence over

the LTM to control the relaxation process completely, during the last 10

patterns.

It was interesting to see that

the system was able to retrieve patterns 85-90 with slightly higher

retrieval-ratio than the LTM or STM. During the retrieval of these five

patterns the LTM and STM were able to cooperate. This proves that the basic

idea of having several projections with different plasticity in a single system

can be beneficial.

The compromise between a high

retrieval-ratio of the first and the last patterns was controlled by the value

of g.

Adjustments of g could not improve the projections ability to cooperate. These

suggested that this design, with two projections and one population of neurons,

was not optimal.

Figure 14 The

retrieval-ratio of the system is plotted as continuous line. The

retrieval-ratio of the LTM is plotted as a dotted line, and the retrieval-ratio

of the STM as a dash-dotted line. Note the increased retrieval-ratio of the

system for the last 10 patterns.

This system basically had the

same two projections as the system in the previous section. The big difference

between the systems was that each of the projections in this system was

projected at a separate group of neurons. The purpose of this experiment was to

determine if it were beneficial too use two networks with different plasticity.

Figure 15 The system has a STM and LTM of

equal size. 1-to-1 connections were used to connect the STM to the LTM. The

input with errors was fed to both the LTM and STM. Output was extracted from

the LTM.

The system was composed a LTM

and STM of equal size. These two memories were connected with a 1-to-1

projectionSTM®LTM. The diagonal elements of the projectionSTM®LTM

were set to 10. This value was derived from a trial and error process seen in

figure 16. When the retrieval-ratio of the system was tested, both of the

networks were fed with input.

The trail and error process to

determine the value of the diagonal elements was performed with ten runs of the

system. For each run, the diagonal elements were set to different values. The

result of these 10 runs is shown in figure 16. The value of the diagonal

elements could have been set to any value between approximately 7-500.

If the diagonal elements, or

weights, had been set to one, there would not have been a connection between

the two memories. Figure 16 shows this fact. When the weights were set to 1

(equal to 0 on the logarithmic x-axel in figure 16) the retrieval-ratio of the

system becomes equal to that of the LTM.

And if the weights had been set to a value smaller than one, there would

have been an inhibitory effect on the neurons in LTM. If the weights had been

set to a value much larger than 500, the system would have shown good

performance on the latest learned patterns, but the system would not have been

able to recall the patterns learned in the beginning of the training set. This

is caused by the strong input from the STM, which makes it impossible for the

LTM to relax into a stable state.

Figure 16 The plot shows 10 runs, with

different values of the connection-weights. Performance is measured separately

for the first 1-90 patterns and the last 91-100 patterns. The dotted lines

represent STM and LTM separately. The solid lines show the performance of the

system.

In figure 17 the retrieval-ratio

of the system is shown. During the first 80 patterns the retrieval-ratio of the

system is equal to that of the LTM. Then, for pattern 80 to 90 the

retrieval-ratio is better than both that of the LTM and STM. During the last 10

patterns the retrieval-ratio is equal to that of the STM.

It was interesting to see that

the cooperation between the two memories (projections in the previous system)

was functioning well. The disruptive influence of the STM on the LTM was almost

negligible. The retrieval-ratio of the last 10 patterns was almost 1. A good retrieval-ratio

of the most recent patterns is necessary when the STM is to be used as a

working memory.

The combination of these facts

proved that the design with two individual networks with different plasticity

was superior to the design of the system in the previous section.

The STM has a strong influence

on the LTM. The STM has the ability to both support and suppress memories in

the LTM with great efficiency. This is an important feature, since it provides

a way to increase the importance of the latest learned patterns. Later on in

this thesis these properties are used to generate useful functions in systems.

Systems with STM are designed to prove the possibility of constructing a

working memory. The STM also provides

the possibility to make reinstatements of the latest memories into the LTM.

Figure 17 A system was based on a STM and

LTM of equal size. The STM and LTM were connected with one-to-one connections

between the neurons of each network.

The experiments presented in

this section were designed to investigate how a system built upon two networks,

could be connected with plastic projections. Both of the networks, LTM and STM,

were of equal size in all the simulations preformed. Different ideas, of how to

utilise the plastic projections were investigated.

There are many connections

between the neurons in the cortex, especially between neurons that are close

together. It seems very unlikely that these neurons are hardwired and unable to

form new connections or delete old connections. In this experiment, the neurons

of the two networks (STM & LTM) were allowed to form whatever connections

they wanted. As with the recurrent connections, these connections can be made

with different plasticity’s.

The connections between the STM

and the LTM were in this experiment plastic. The projectionSTM®LTM

matrix was no longer diagonal of weights. Instead it was a full matrix of

weights, representing all possible wirings between the neurons of the two networks.

When the size of the STM differs from that of the LTM or when the pattern

representation in the STM differs from that in the LTM, there is a need for a

plastic projection. The plastic projections were trained with the same Bayesian

learning rule that was used to train the networks recurrent projections. The

projectionSTM and the projectionSTM®LTM

were trained with a set

to 0.5. The system’s training and operation mode is seen in figure 18. The

projectionSTM®LTM was scaled down with gSTM®LTM

= 0.03.

Figure 18 The system used in the

experiments of this section. Note the added plastic projection from the STM to

the LTM. The plastic projection is a full matrix (100x100) of weights.

To determine the

value of gSTM®LTM a trial and error process was used. Figure 19

shows 10 runs of the system, with different values of gSTM®LTM

in each run. G was set to the value 0.03 which corresponds to

approximately -3.5 on the

logarithmic scale of figure 19. If the value of g is set to a smaller

value, the retrieval-ratio of patterns 91-100 is decreased. If the g

is set to a larger value than 0.03 the retrieval-ratio of patterns 1-90 is